What could be more refreshing than setting up a nice little EVPN-VXLAN on your vQFX just for fun?

This blog post will show you how to do it and break down the important parts.

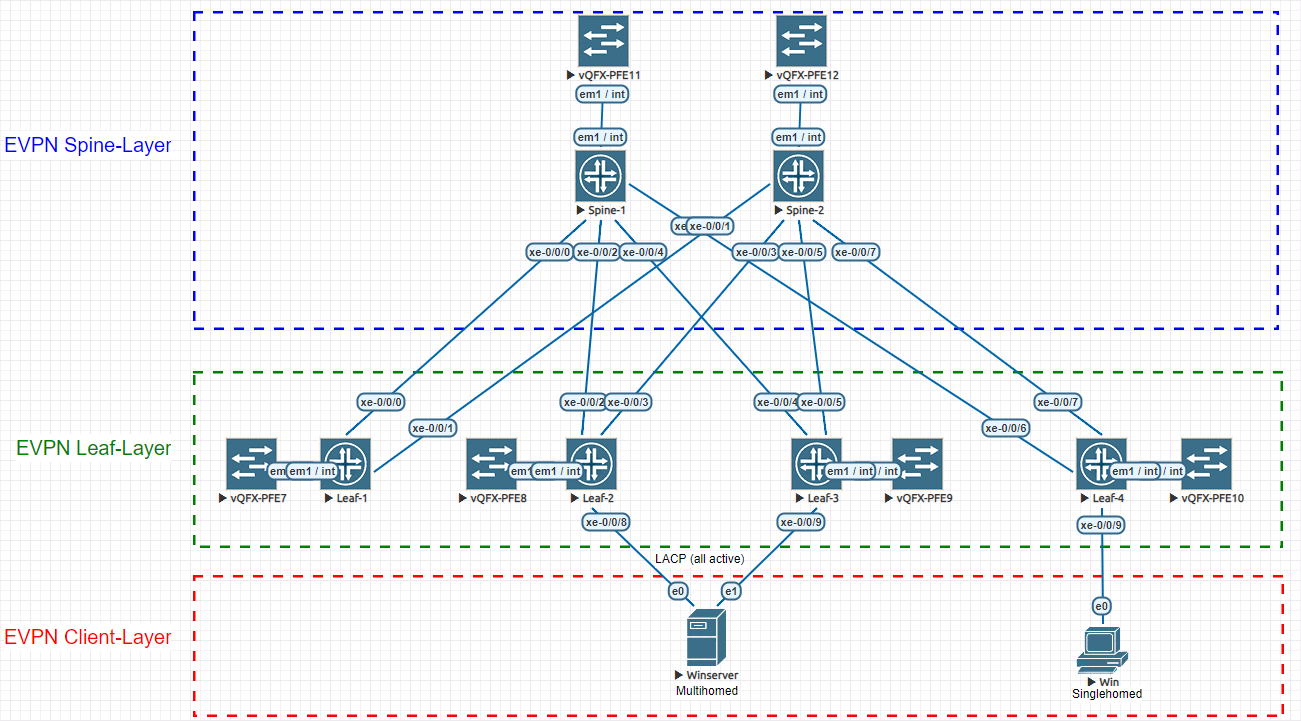

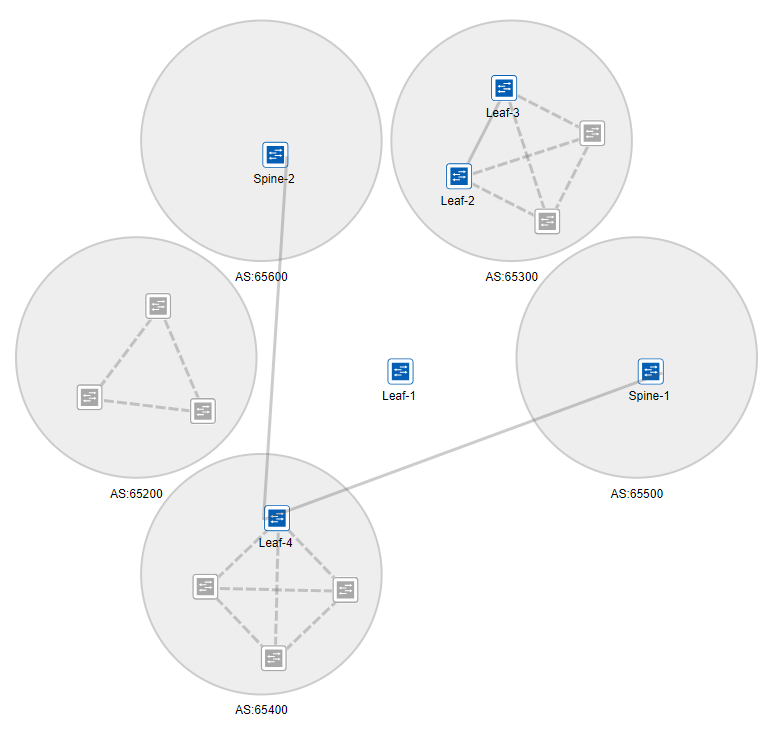

We will look at this topology (designed in EVE-NG) and implement an EVPN-VXLAN spine-and-leaf config so that our virtual servers, Win and Winserver, are able to communicate with each other. On top of that, we will configure Winserver for multihoming.


In case you already know EVPN and just want to take a look at the sample config, you can jump to the end of this blog post, where I post the full configuration for the setup (excluding Windows Server NIC Teaming with LACP). If you want to know how to use NIC Teaming on Windows Server 2016, simply search for it on Google. There are tons of instructions (with and without LACP), but this setup uses LACP.

Step 1: Create the Topology

Before implementing EVPN-VXLAN you should think carefully about your setup. I’ve seen a lot of setups where the spine and leaf topology is laid out suboptimally and causes trouble later on. So think carefully about the topology itself and also about the surroundings that you might need (like route reflectors or VC fabrics). Once you have that out of the way, it’s time to sit down, build the setup and prepare the connections needed. For my tests I usually use EVE-NG, because with EVE-NG it’s very easy to “patch” all the cords needed on the fly (with Pro even when the devices are powered on). I advise you to always PoC a setup like this to avoid unnecessary surprises when implementing it. There are usually many things that you will see differently after the PoC, and this will help you find the optimal setup for your company.

We start with the connections from Spine-1 to all four leaf devices:

set interfaces xe-0/0/0 unit 0 description "to Leaf 1"

set interfaces xe-0/0/0 unit 0 family inet address 172.16.1.100/24

set interfaces xe-0/0/2 unit 0 description "to Leaf 2"

set interfaces xe-0/0/2 unit 0 family inet address 172.16.3.100/24

set interfaces xe-0/0/4 unit 0 description "to Leaf 3"

set interfaces xe-0/0/4 unit 0 family inet address 172.16.5.100/24

set interfaces xe-0/0/6 unit 0 description "to Leaf 4"

set interfaces xe-0/0/6 unit 0 family inet address 172.16.7.100/24

You can edit the address scheme as you like. I personally use 172.16/16 quite often for my labs and setups where I need private IPv4 addresses.

You should also configure lo0 addresses for management and, later, for identifying your local device:

set interfaces lo0 unit 0 family inet address 172.16.50.1/32
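If you script your lab, the address plan is easy to derive in code. The following is only a sketch, assuming the pattern I read off the full configs at the end of this post (one /24 per spine-leaf link, spines on .100, leaves on .1, loopbacks on 10/20/30/40 for the leaves and 50/60 for the spines). The function names `link_addresses` and `loopback` are made up for this illustration.

```python
import ipaddress

def link_addresses(spine, leaf):
    """Return (spine_ip, leaf_ip) for the link between spine 1-2 and leaf 1-4."""
    # Pattern taken from the lab configs: Spine-1/Leaf-1 -> 172.16.1.0/24,
    # Spine-2/Leaf-1 -> 172.16.2.0/24, Spine-1/Leaf-2 -> 172.16.3.0/24, ...
    third_octet = 2 * (leaf - 1) + spine
    net = ipaddress.ip_network(f"172.16.{third_octet}.0/24")
    # Spines take host .100 and leaves take host .1 on every link.
    return str(net.network_address + 100), str(net.network_address + 1)

def loopback(role, index):
    """Return the lo0 address: leaves use 10/20/30/40, spines use 50/60."""
    third_octet = 10 * index if role == "leaf" else 40 + 10 * index
    return f"172.16.{third_octet}.1"

if __name__ == "__main__":
    for leaf in range(1, 5):
        for spine in (1, 2):
            print(f"Spine-{spine} <-> Leaf-{leaf}:", link_addresses(spine, leaf))
```

If you pick a different address scheme, only the two formulas need to change.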

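As the last part of this step, you can already configure the xe/ae interface towards your server(s) on the leaf devices and assign it an ESI (the leaf configs at the end of this post also set chassis aggregated-devices ethernet device-count 1, which Junos needs before it creates ae0):

set interfaces xe-0/0/8 description "to Server"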

set interfaces xe-0/0/8 ether-options 802.3ad ae0

set interfaces ae0 encapsulation ethernet-bridge

set interfaces ae0 esi 00:01:01:01:01:01:01:01:01:01

set interfaces ae0 esi all-active

set interfaces ae0 aggregated-ether-options lacp active

set interfaces ae0 aggregated-ether-options lacp periodic fast

set interfaces ae0 aggregated-ether-options lacp system-id 00:00:00:01:01:01

set interfaces ae0 unit 0 family ethernet-switching vlan members vlan10
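Since Winserver is multihomed, every leaf it connects to (Leaf-2 and Leaf-3 in the full configs) has to present the same 10-octet ESI and the same LACP system-id, so that the server sees one LACP partner and the fabric sees one Ethernet segment. A small sanity check could look like this. It is a sketch only: the dictionary layout and the function name `check_esi_lag` are my own assumptions, and the values are copied from the configs in this post.

```python
import re

ESI_RE = re.compile(r"^(?:[0-9a-fA-F]{2}:){9}[0-9a-fA-F]{2}$")        # 10 octets
SYSTEM_ID_RE = re.compile(r"^(?:[0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2}$")  # 6 octets

def check_esi_lag(leaves):
    """Return a list of problems found; an empty list means the leaves are consistent."""
    problems = []
    for name, cfg in leaves.items():
        if not ESI_RE.match(cfg["esi"]):
            problems.append(f"{name}: ESI is not 10 colon-separated octets")
        if not SYSTEM_ID_RE.match(cfg["lacp_system_id"]):
            problems.append(f"{name}: LACP system-id is not 6 colon-separated octets")
    # All leaves attached to the same server must agree on both values.
    if len({cfg["esi"].lower() for cfg in leaves.values()}) > 1:
        problems.append("ESI differs between leaves")
    if len({cfg["lacp_system_id"].lower() for cfg in leaves.values()}) > 1:
        problems.append("LACP system-id differs between leaves")
    return problems

LAB = {
    "Leaf-2": {"esi": "00:01:01:01:01:01:01:01:01:01", "lacp_system_id": "00:00:00:01:01:01"},
    "Leaf-3": {"esi": "00:01:01:01:01:01:01:01:01:01", "lacp_system_id": "00:00:00:01:01:01"},
}

if __name__ == "__main__":
    print(check_esi_lag(LAB) or "ESI-LAG settings are consistent")
```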

Step 2: Create the Underlay

Next, you should define some local system settings for your underlay network:

set routing-options router-id 172.16.50.1

set routing-options autonomous-system 65500

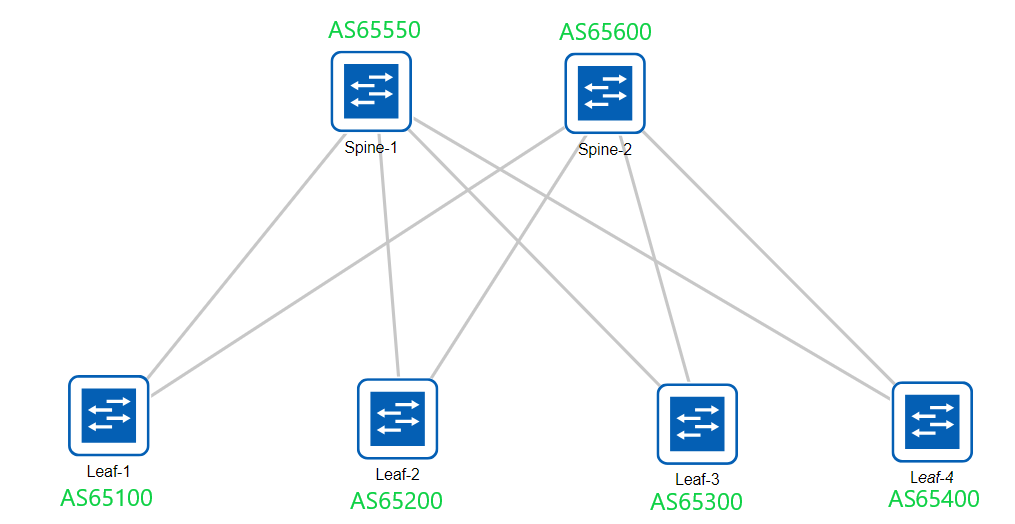

Your underlay will be vital for your Overlay – obvious right? But this part can be tricky – especially with route reflectors. In our case, we will make it simple by using plain EBGP.

Why EBGP? Because with EBGP you can scale out your EVPN to infinity and beyond 😉

You could, of course, use OSPF for your underlay network, but the drawback is scaling. Most customers use EVPN because VC, VCF, JunOS Fusion and so on do not scale out the way EVPN does.

And when comparing OSPF vs BGP in terms of scale – well – you already know who will win, right?

So we start by creating a group called “underlay” (I would advise you to pick a group name that fits your own setup). The example is taken from Leaf-2:

set protocols bgp group underlay type external

set protocols bgp group underlay description "to Spines 1/2"

set protocols bgp group underlay export directs

set protocols bgp group underlay multipath multiple-as

set protocols bgp group underlay neighbor 172.16.3.100 peer-as 65500

set protocols bgp group underlay neighbor 172.16.4.100 peer-as 65600

You should also create a policy to export your directly connected networks to the underlay, so that you have full-mesh connectivity and your loopback addresses are redistributed into your BGP underlay:

set policy-options policy-statement directs term 1 from protocol direct

set policy-options policy-statement directs term 1 then accept

After doing this on all spines and leaves (of course with different AS numbers and IPs), you should have a nice BGP fabric.
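Because only the AS number, the router-id and the two spine neighbors change from leaf to leaf, the underlay lends itself to templating. Here is a rough sketch that prints the leaf-side underlay commands, assuming the numbering used in this lab (leaf N uses AS 65000 + 100 × N and loopback 172.16.(10 × N).1, Spine-1 is AS 65500, Spine-2 is AS 65600). The helper `leaf_underlay` is hypothetical, not a Juniper tool.

```python
SPINE_AS = {1: 65500, 2: 65600}  # Spine-1 and Spine-2, as in the lab

def leaf_underlay(leaf):
    """Return the underlay 'set' commands for leaf 1-4 as a list of strings."""
    cmds = [
        f"set routing-options router-id 172.16.{10 * leaf}.1",
        f"set routing-options autonomous-system {65000 + 100 * leaf}",
        "set protocols bgp group underlay type external",
        "set protocols bgp group underlay export directs",
        "set protocols bgp group underlay multipath multiple-as",
    ]
    for spine, spine_as in SPINE_AS.items():
        # Link subnets follow 172.16.<2*(leaf-1)+spine>.0/24 with the spine on .100.
        third_octet = 2 * (leaf - 1) + spine
        cmds.append(
            f"set protocols bgp group underlay neighbor 172.16.{third_octet}.100 peer-as {spine_as}"
        )
    return cmds

if __name__ == "__main__":
    print("\n".join(leaf_underlay(2)))
```

For Leaf-2 this reproduces the two neighbor statements from this step (172.16.3.100 with AS 65500 and 172.16.4.100 with AS 65600).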

Time for the next step – the overlay

Step 3: Create the EVPN-VXLAN Overlay

Now it’s time for the fun part: the EVPN-VXLAN overlay.

Start by adding an overlay group for the MP-IBGP sessions between the leaf devices:

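set protocols bgp group overlay type internal

set protocols bgp group overlay local-address 172.16.20.1

set protocols bgp group overlay family evpn signaling

set protocols bgp group overlay local-as 65700

set protocols bgp group overlay multipath

set protocols bgp group overlay neighbor 172.16.10.1

set protocols bgp group overlay neighbor 172.16.30.1

set protocols bgp group overlay neighbor 172.16.40.1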
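In the full leaf configs at the end of this post, the overlay is a plain IBGP full mesh: every leaf peers with the loopback of every other leaf. The sketch below derives the neighbor list per leaf and counts the sessions, which also shows why larger fabrics tend to move to route reflectors. The loopbacks and the overlay AS 65700 come from the lab configs, while the function names are assumptions for this example.

```python
from itertools import combinations

LEAF_LOOPBACKS = {
    "Leaf-1": "172.16.10.1",
    "Leaf-2": "172.16.20.1",
    "Leaf-3": "172.16.30.1",
    "Leaf-4": "172.16.40.1",
}

def overlay_neighbors(local):
    """Return the loopbacks of every leaf except the local one."""
    return [ip for name, ip in LEAF_LOOPBACKS.items() if name != local]

def overlay_commands(local):
    """Return the overlay group 'set' commands for one leaf."""
    cmds = [
        "set protocols bgp group overlay type internal",
        f"set protocols bgp group overlay local-address {LEAF_LOOPBACKS[local]}",
        "set protocols bgp group overlay family evpn signaling",
        "set protocols bgp group overlay local-as 65700",
        "set protocols bgp group overlay multipath",
    ]
    cmds += [f"set protocols bgp group overlay neighbor {ip}" for ip in overlay_neighbors(local)]
    return cmds

def session_count():
    """Number of IBGP sessions in a full mesh of all leaves: n * (n - 1) / 2."""
    return len(list(combinations(LEAF_LOOPBACKS, 2)))

if __name__ == "__main__":
    print("\n".join(overlay_commands("Leaf-2")))
    print("sessions in the mesh:", session_count())
```

With four leaves the mesh needs six sessions; the count grows quadratically as you add leaves.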

Now it’s time for your loopback-address to really shine.

Specify the loopback interface as the source address for the VTEP tunnel and also, specify a route distinguisher to uniquely identify routes sent from this device:

set switch-options vtep-source-interface lo0.0

set switch-options route-distinguisher 172.16.20.1:1

Doesn’t look scary if you break it down, right?

The key in complex setups is to break the config down to smaller parts.

This way, you can solve almost any problem.

Next, specify the VRF import policy and target, and add your EVPN protocol options for the VNIs and the multicast mode:

set switch-options vrf-import LEAF-IN

set switch-options vrf-target target:9999:9999

set protocols evpn vni-options vni 10 vrf-target export target:1:10

set protocols evpn encapsulation vxlan

set protocols evpn multicast-mode ingress-replication

set protocols evpn extended-vni-list 10

Next comes the VRF import policy, which accepts the EVPN routes advertised by your other leaf devices:

set policy-options policy-statement LEAF-IN term import_leaf_esi from community comm-leaf_esi

set policy-options policy-statement LEAF-IN term import_leaf_esi then accept

set policy-options policy-statement LEAF-IN term import_vni10 from community com10

set policy-options policy-statement LEAF-IN term import_vni10 then accept

We also set the community targets and configure some load balancing:

set policy-options community com10 members target:1:10

set policy-options community comm-leaf_esi members target:9999:9999

set policy-options policy-statement loadbalance then load-balance per-packet

set routing-options forwarding-table export loadbalance
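To get a feeling for what LEAF-IN does, you can model it as a first-match lookup on route targets. This is a simplified sketch, not how Junos evaluates policies internally. It assumes that a route matching no term is not imported, and, as far as I understand the config, that target:9999:9999 tags the ESI-related routes while target:1:10 tags the routes for VNI 10.

```python
# Community names and members exactly as defined in this post.
COMMUNITIES = {
    "com10": "target:1:10",
    "comm-leaf_esi": "target:9999:9999",
}

# Terms of LEAF-IN in config order: (term name, community it matches on).
LEAF_IN_TERMS = [
    ("import_leaf_esi", "comm-leaf_esi"),
    ("import_vni10", "com10"),
]

def leaf_in(route_targets):
    """Return the name of the first matching term, or None if nothing matches."""
    for term, community in LEAF_IN_TERMS:
        if COMMUNITIES[community] in route_targets:
            return term
    # Assumption: routes that match no term are not imported.
    return None

if __name__ == "__main__":
    for targets in ({"target:1:10"}, {"target:9999:9999"}, {"target:1:20"}):
        print(sorted(targets), "->", leaf_in(targets) or "not imported")
```

The third example uses a made-up target (target:1:20) to show that a VNI without its own term would not be picked up by this policy.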

Finally, we define a server facing VLAN (in our example vlan 10) and equip it with a VNI number:

set vlans vlan10 vlan-id 10

set vlans vlan10 vxlan vni 10

set vlans vlan10 vxlan ingress-node-replication
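One thing that is easy to miss: a VLAN in this setup is not only the three vlans lines. Going by the leaf configs in this post, the same VNI also appears in the extended-vni-list, in vni-options, in a community and in a LEAF-IN term. The sketch below collects all of them for one VLAN, assuming you keep the conventions used here (VNI equal to VLAN ID, route target target:1:<vni>). VLAN 20 in the usage line is purely hypothetical and not part of the lab.

```python
def vlan_commands(vlan_id):
    """Return the 'set' commands this post uses for one VLAN, with VNI == VLAN ID."""
    vni = vlan_id
    name = f"vlan{vlan_id}"
    community = f"com{vni}"
    return [
        f"set vlans {name} vlan-id {vlan_id}",
        f"set vlans {name} vxlan vni {vni}",
        f"set vlans {name} vxlan ingress-node-replication",
        f"set protocols evpn extended-vni-list {vni}",
        f"set protocols evpn vni-options vni {vni} vrf-target export target:1:{vni}",
        f"set policy-options community {community} members target:1:{vni}",
        f"set policy-options policy-statement LEAF-IN term import_vni{vni} from community {community}",
        f"set policy-options policy-statement LEAF-IN term import_vni{vni} then accept",
    ]

if __name__ == "__main__":
    # VLAN 10 reproduces the lab; VLAN 20 is a made-up second VLAN.
    for vlan in (10, 20):
        print("\n".join(vlan_commands(vlan)))
```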

Step 4: Add your Clients and verify the Setup

Congrats, your EVPN is just a commit away. Do it! This part is about what happens “after the BANG”. At this point your EVPN should be up and running. YAY. But what now? How can we check what the EVPN does for us? Let’s get to it.

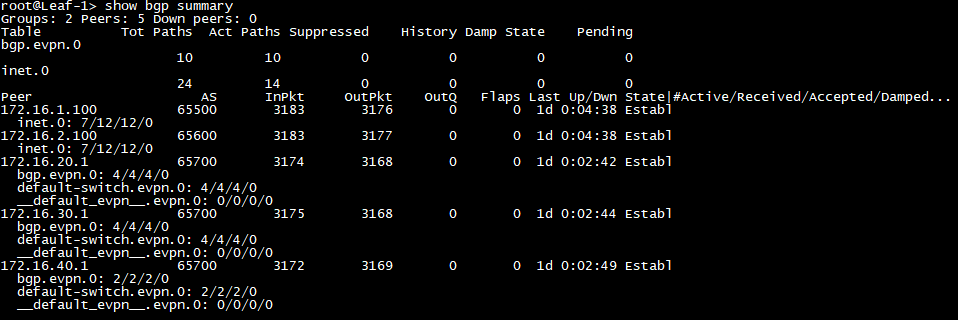

As you can see, in the given topology our BGP receives routes from the underlay and also from the overlay.

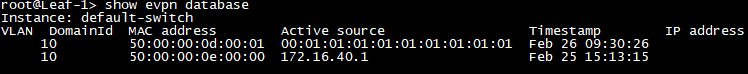

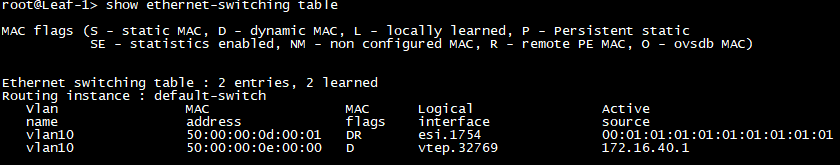

Sweet, our two hosts are already in the EVPN database and, as you can see, one is multihomed and one is single-homed. You can tell immediately because the active source is different. The multihomed server is sourced from an ESI (hence the ESI number as source), so basically from multiple leaf devices, while the single-homed device is sourced from the leaf device with the loopback address 172.16.40.1 (Leaf-4).

Both of them are also added to each leaf device’s local ethernet-switching table. So regardless of the leaf you connect the devices to, they will have full Layer 2 reachability across your EVPN-VXLAN. AWESOME! You can simply test this by pinging from one device to the other 😉

Now imagine each leaf device resides in a different DC or building. You no longer need to worry about DC stretching; all you need is a solid underlay to build your infrastructure on. With Contrail, managing your EVPN-VXLAN is even more convenient, but that will be covered in a later blog post.

I also tried to add the lab to JunOS Space, because (thanks to EVE-NG) you can link your lab to the real world and discover all the devices in JunOS Space. I was actually impressed that the vQFX can be added to Space (just discover the devices with ping only and add SNMP later; otherwise the discovery will fail because of the SNMP string that the vQFX uses).

With “IP Connectivity”, however, Space seems to be a bit drunk. But since I only wanted to see whether it could roughly manage my EVPN, I would say: not at this point 😀

Hopefully you now have less fear when someone mentions EVPN-VXLAN.

And for those who came here just to snag the config to play with it, here it is 😉

Spine-1:

set version 17.4R1.16

set system host-name Spine-1

set system root-authentication encrypted-password "$6$rB5kPIFJ$91QMtJeCLoVn1o.TN5fPMhQF44MyQXrN0yfMn4Br6lasdBcdyX.XuHE7zYdAC8t4M07icNaSjlusHlVdu4Bxy."

set system root-authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system login user vagrant uid 2000

set system login user vagrant class super-user

set system login user vagrant authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system services ssh root-login allow

set system services netconf ssh

set system services rest http port 8080

set system services rest enable-explorer

set system syslog user * any emergency

set system syslog file messages any notice

set system syslog file messages authorization info

set system syslog file interactive-commands interactive-commands any

set system extensions providers juniper license-type juniper deployment-scope commercial

set system extensions providers chef license-type juniper deployment-scope commercial

set interfaces xe-0/0/0 unit 0 description "to Leaf 1"

set interfaces xe-0/0/0 unit 0 family inet address 172.16.1.100/24

set interfaces xe-0/0/2 unit 0 description "to Leaf 2"

set interfaces xe-0/0/2 unit 0 family inet address 172.16.3.100/24

set interfaces xe-0/0/4 unit 0 description "to Leaf 3"

set interfaces xe-0/0/4 unit 0 family inet address 172.16.5.100/24

set interfaces xe-0/0/6 unit 0 description "to Leaf 4"

set interfaces xe-0/0/6 unit 0 family inet address 172.16.7.100/24

set interfaces em0 unit 0 family inet dhcp

set interfaces em1 unit 0 family inet address 169.254.0.2/24

set interfaces lo0 unit 0 family inet address 172.16.50.1/32

set forwarding-options storm-control-profiles default all

set routing-options router-id 172.16.50.1

set routing-options autonomous-system 65500

set protocols bgp group underlay type external

set protocols bgp group underlay description "to Leaf 1/2/3/4"

set protocols bgp group underlay export directs

set protocols bgp group underlay multipath multiple-as

set protocols bgp group underlay neighbor 172.16.1.1 peer-as 65100

set protocols bgp group underlay neighbor 172.16.3.1 peer-as 65200

set protocols bgp group underlay neighbor 172.16.5.1 peer-as 65300

set protocols bgp group underlay neighbor 172.16.7.1 peer-as 65400

set protocols igmp-snooping vlan default

set policy-options policy-statement directs term 1 from protocol direct

set policy-options policy-statement directs term 1 then accept

set vlans default vlan-id 1

Spine-2:

set version 17.4R1.16

set system host-name Spine-2

set system root-authentication encrypted-password "$6$rB5kPIFJ$91QMtJeCLoVn1o.TN5fPMhQF44MyQXrN0yfMn4Br6lasdBcdyX.XuHE7zYdAC8t4M07icNaSjlusHlVdu4Bxy."

set system root-authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system login user vagrant uid 2000

set system login user vagrant class super-user

set system login user vagrant authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system services ssh root-login allow

set system services netconf ssh

set system services rest http port 8080

set system services rest enable-explorer

set system syslog user * any emergency

set system syslog file messages any notice

set system syslog file messages authorization info

set system syslog file interactive-commands interactive-commands any

set system extensions providers juniper license-type juniper deployment-scope commercial

set system extensions providers chef license-type juniper deployment-scope commercial

set interfaces xe-0/0/1 unit 0 description "to Leaf 1"

set interfaces xe-0/0/1 unit 0 family inet address 172.16.2.100/24

set interfaces xe-0/0/3 unit 0 description "to Leaf 2"

set interfaces xe-0/0/3 unit 0 family inet address 172.16.4.100/24

set interfaces xe-0/0/5 unit 0 description "to Leaf 3"

set interfaces xe-0/0/5 unit 0 family inet address 172.16.6.100/24

set interfaces xe-0/0/7 unit 0 description "to Leaf 4"

set interfaces xe-0/0/7 unit 0 family inet address 172.16.8.100/24

set interfaces em0 unit 0 family inet dhcp

set interfaces em1 unit 0 family inet address 169.254.0.2/24

set interfaces lo0 unit 0 family inet address 172.16.60.1/32

set forwarding-options storm-control-profiles default all

set routing-options router-id 172.16.60.1

set routing-options autonomous-system 65600

set protocols bgp group underlay type external

set protocols bgp group underlay description "to Leaf 1/2/3/4"

set protocols bgp group underlay export directs

set protocols bgp group underlay multipath multiple-as

set protocols bgp group underlay neighbor 172.16.2.1 peer-as 65100

set protocols bgp group underlay neighbor 172.16.4.1 peer-as 65200

set protocols bgp group underlay neighbor 172.16.6.1 peer-as 65300

set protocols bgp group underlay neighbor 172.16.8.1 peer-as 65400

set protocols igmp-snooping vlan default

set policy-options policy-statement directs term 1 from protocol direct

set policy-options policy-statement directs term 1 then accept

set vlans default vlan-id 1

Leaf-1:

set version 17.4R1.16

set system host-name Leaf-1

set system root-authentication encrypted-password "$6$rB5kPIFJ$91QMtJeCLoVn1o.TN5fPMhQF44MyQXrN0yfMn4Br6lasdBcdyX.XuHE7zYdAC8t4M07icNaSjlusHlVdu4Bxy."

set system root-authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system login user vagrant uid 2000

set system login user vagrant class super-user

set system login user vagrant authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system services ssh root-login allow

set system services netconf ssh

set system services rest http port 8080

set system services rest enable-explorer

set system syslog user * any emergency

set system syslog file messages any notice

set system syslog file messages authorization info

set system syslog file interactive-commands interactive-commands any

set system extensions providers juniper license-type juniper deployment-scope commercial

set system extensions providers chef license-type juniper deployment-scope commercial

set interfaces xe-0/0/0 unit 0 description "to Spine 1"

set interfaces xe-0/0/0 unit 0 family inet address 172.16.1.1/24

set interfaces xe-0/0/1 unit 0 description "to Spine 2"

set interfaces xe-0/0/1 unit 0 family inet address 172.16.2.1/24

set interfaces em0 unit 0 family inet dhcp

set interfaces em1 unit 0 family inet address 169.254.0.2/24

set interfaces lo0 unit 0 family inet address 172.16.10.1/32

set forwarding-options storm-control-profiles default all

set routing-options router-id 172.16.10.1

set routing-options autonomous-system 65100

set routing-options forwarding-table export loadbalance

set protocols bgp group underlay type external

set protocols bgp group underlay description "to Spine 1/2"

set protocols bgp group underlay export directs

set protocols bgp group underlay multipath multiple-as

set protocols bgp group underlay neighbor 172.16.1.100 peer-as 65500

set protocols bgp group underlay neighbor 172.16.2.100 peer-as 65600

set protocols bgp group overlay type internal

set protocols bgp group overlay local-address 172.16.10.1

set protocols bgp group overlay family evpn signaling

set protocols bgp group overlay local-as 65700

set protocols bgp group overlay multipath

set protocols bgp group overlay neighbor 172.16.20.1

set protocols bgp group overlay neighbor 172.16.30.1

set protocols bgp group overlay neighbor 172.16.40.1

set protocols evpn vni-options vni 10 vrf-target export target:1:10

set protocols evpn encapsulation vxlan

set protocols evpn multicast-mode ingress-replication

set protocols evpn extended-vni-list 10

set protocols igmp-snooping vlan default

set policy-options policy-statement LEAF-IN term import_leaf_esi from community comm-leaf_esi

set policy-options policy-statement LEAF-IN term import_leaf_esi then accept

set policy-options policy-statement LEAF-IN term import_vni10 from community com10

set policy-options policy-statement LEAF-IN term import_vni10 then accept

set policy-options policy-statement directs term 1 from protocol direct

set policy-options policy-statement directs term 1 then accept

set policy-options policy-statement loadbalance then load-balance per-packet

set policy-options community com10 members target:1:10

set policy-options community comm-leaf_esi members target:9999:9999

set switch-options vtep-source-interface lo0.0

set switch-options route-distinguisher 172.16.10.1:1

set switch-options vrf-import LEAF-IN

set switch-options vrf-target target:9999:9999

set vlans default vlan-id 1

set vlans vlan10 vlan-id 10

set vlans vlan10 vxlan vni 10

set vlans vlan10 vxlan ingress-node-replication

Leaf-2:

set version 17.4R1.16

set system host-name Leaf-2

set system root-authentication encrypted-password "$6$rB5kPIFJ$91QMtJeCLoVn1o.TN5fPMhQF44MyQXrN0yfMn4Br6lasdBcdyX.XuHE7zYdAC8t4M07icNaSjlusHlVdu4Bxy."

set system root-authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system login user vagrant uid 2000

set system login user vagrant class super-user

set system login user vagrant authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system services ssh root-login allow

set system services netconf ssh

set system services rest http port 8080

set system services rest enable-explorer

set system syslog user * any emergency

set system syslog file messages any notice

set system syslog file messages authorization info

set system syslog file interactive-commands interactive-commands any

set system extensions providers juniper license-type juniper deployment-scope commercial

set system extensions providers chef license-type juniper deployment-scope commercial

set chassis aggregated-devices ethernet device-count 1

set interfaces xe-0/0/2 unit 0 description "to Spine 1"

set interfaces xe-0/0/2 unit 0 family inet address 172.16.3.1/24

set interfaces xe-0/0/3 unit 0 description "to Spine 2"

set interfaces xe-0/0/3 unit 0 family inet address 172.16.4.1/24

set interfaces xe-0/0/8 description "to Server"

set interfaces xe-0/0/8 ether-options 802.3ad ae0

set interfaces ae0 encapsulation ethernet-bridge

set interfaces ae0 esi 00:01:01:01:01:01:01:01:01:01

set interfaces ae0 esi all-active

set interfaces ae0 aggregated-ether-options lacp active

set interfaces ae0 aggregated-ether-options lacp periodic fast

set interfaces ae0 aggregated-ether-options lacp system-id 00:00:00:01:01:01

set interfaces ae0 unit 0 family ethernet-switching vlan members vlan10

set interfaces em0 unit 0 family inet dhcp

set interfaces em1 unit 0 family inet address 169.254.0.2/24

set interfaces lo0 unit 0 family inet address 172.16.20.1/32

set forwarding-options storm-control-profiles default all

set routing-options router-id 172.16.20.1

set routing-options autonomous-system 65200

set routing-options forwarding-table export loadbalance

set protocols bgp group underlay type external

set protocols bgp group underlay description "to Spine 1/2"

set protocols bgp group underlay export directs

set protocols bgp group underlay multipath multiple-as

set protocols bgp group underlay neighbor 172.16.3.100 peer-as 65500

set protocols bgp group underlay neighbor 172.16.4.100 peer-as 65600

set protocols bgp group overlay type internal

set protocols bgp group overlay local-address 172.16.20.1

set protocols bgp group overlay family evpn signaling

set protocols bgp group overlay local-as 65700

set protocols bgp group overlay multipath

set protocols bgp group overlay neighbor 172.16.10.1

set protocols bgp group overlay neighbor 172.16.30.1

set protocols bgp group overlay neighbor 172.16.40.1

set protocols evpn vni-options vni 10 vrf-target export target:1:10

set protocols evpn encapsulation vxlan

set protocols evpn multicast-mode ingress-replication

set protocols evpn extended-vni-list 10

set protocols igmp-snooping vlan default

set policy-options policy-statement LEAF-IN term import_leaf_esi from community comm-leaf_esi

set policy-options policy-statement LEAF-IN term import_leaf_esi then accept

set policy-options policy-statement LEAF-IN term import_vni10 from community com10

set policy-options policy-statement LEAF-IN term import_vni10 then accept

set policy-options policy-statement directs term 1 from protocol direct

set policy-options policy-statement directs term 1 then accept

set policy-options policy-statement loadbalance then load-balance per-packet

set policy-options community com10 members target:1:10

set policy-options community comm-leaf_esi members target:9999:9999

set switch-options vtep-source-interface lo0.0

set switch-options route-distinguisher 172.16.20.1:1

set switch-options vrf-import LEAF-IN

set switch-options vrf-target target:9999:9999

set vlans default vlan-id 1

set vlans vlan10 vlan-id 10

set vlans vlan10 vxlan vni 10

set vlans vlan10 vxlan ingress-node-replication

Leaf-3:

set version 17.4R1.16

set system host-name Leaf-3

set system root-authentication encrypted-password "$6$rB5kPIFJ$91QMtJeCLoVn1o.TN5fPMhQF44MyQXrN0yfMn4Br6lasdBcdyX.XuHE7zYdAC8t4M07icNaSjlusHlVdu4Bxy."

set system root-authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system login user vagrant uid 2000

set system login user vagrant class super-user

set system login user vagrant authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system services ssh root-login allow

set system services netconf ssh

set system services rest http port 8080

set system services rest enable-explorer

set system syslog user * any emergency

set system syslog file messages any notice

set system syslog file messages authorization info

set system syslog file interactive-commands interactive-commands any

set system extensions providers juniper license-type juniper deployment-scope commercial

set system extensions providers chef license-type juniper deployment-scope commercial

set chassis aggregated-devices ethernet device-count 1

set interfaces xe-0/0/4 unit 0 description "to Spine 1"

set interfaces xe-0/0/4 unit 0 family inet address 172.16.5.1/24

set interfaces xe-0/0/5 unit 0 description "to Spine 2"

set interfaces xe-0/0/5 unit 0 family inet address 172.16.6.1/24

set interfaces xe-0/0/9 description "to Server"

set interfaces xe-0/0/9 ether-options 802.3ad ae0

set interfaces ae0 encapsulation ethernet-bridge

set interfaces ae0 esi 00:01:01:01:01:01:01:01:01:01

set interfaces ae0 esi all-active

set interfaces ae0 aggregated-ether-options lacp active

set interfaces ae0 aggregated-ether-options lacp periodic fast

set interfaces ae0 aggregated-ether-options lacp system-id 00:00:00:01:01:01

set interfaces ae0 unit 0 family ethernet-switching vlan members vlan10

set interfaces em0 unit 0 family inet dhcp

set interfaces em1 unit 0 family inet address 169.254.0.2/24

set interfaces lo0 unit 0 family inet address 172.16.30.1/32

set forwarding-options storm-control-profiles default all

set routing-options router-id 172.16.30.1

set routing-options autonomous-system 65300

set routing-options forwarding-table export loadbalance

set protocols bgp group underlay type external

set protocols bgp group underlay description "to Spine 1/2"

set protocols bgp group underlay export directs

set protocols bgp group underlay multipath multiple-as

set protocols bgp group underlay neighbor 172.16.5.100 peer-as 65500

set protocols bgp group underlay neighbor 172.16.6.100 peer-as 65600

set protocols bgp group overlay type internal

set protocols bgp group overlay local-address 172.16.30.1

set protocols bgp group overlay family evpn signaling

set protocols bgp group overlay local-as 65700

set protocols bgp group overlay multipath

set protocols bgp group overlay neighbor 172.16.10.1

set protocols bgp group overlay neighbor 172.16.20.1

set protocols bgp group overlay neighbor 172.16.40.1

set protocols evpn vni-options vni 10 vrf-target export target:1:10

set protocols evpn encapsulation vxlan

set protocols evpn multicast-mode ingress-replication

set protocols evpn extended-vni-list 10

set protocols igmp-snooping vlan default

set policy-options policy-statement LEAF-IN term import_leaf_esi from community comm-leaf_esi

set policy-options policy-statement LEAF-IN term import_leaf_esi then accept

set policy-options policy-statement LEAF-IN term import_vni10 from community com10

set policy-options policy-statement LEAF-IN term import_vni10 then accept

set policy-options policy-statement directs term 1 from protocol direct

set policy-options policy-statement directs term 1 then accept

set policy-options policy-statement loadbalance then load-balance per-packet

set policy-options community com10 members target:1:10

set policy-options community comm-leaf_esi members target:9999:9999

set switch-options vtep-source-interface lo0.0

set switch-options route-distinguisher 172.16.30.1:1

set switch-options vrf-import LEAF-IN

set switch-options vrf-target target:9999:9999

set vlans default vlan-id 1

set vlans vlan10 vlan-id 10

set vlans vlan10 vxlan vni 10

set vlans vlan10 vxlan ingress-node-replication

Leaf-4:

set version 17.4R1.16

set system host-name Leaf-4

set system root-authentication encrypted-password "$6$rB5kPIFJ$91QMtJeCLoVn1o.TN5fPMhQF44MyQXrN0yfMn4Br6lasdBcdyX.XuHE7zYdAC8t4M07icNaSjlusHlVdu4Bxy."

set system root-authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system login user vagrant uid 2000

set system login user vagrant class super-user

set system login user vagrant authentication ssh-rsa "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ== vagrant insecure public key"

set system services ssh root-login allow

set system services netconf ssh

set system services rest http port 8080

set system services rest enable-explorer

set system syslog user * any emergency

set system syslog file messages any notice

set system syslog file messages authorization info

set system syslog file interactive-commands interactive-commands any

set system extensions providers juniper license-type juniper deployment-scope commercial

set system extensions providers chef license-type juniper deployment-scope commercial

set interfaces xe-0/0/6 unit 0 description "to Spine 1"

set interfaces xe-0/0/6 unit 0 family inet address 172.16.7.1/24

set interfaces xe-0/0/7 unit 0 description "to Spine 2"

set interfaces xe-0/0/7 unit 0 family inet address 172.16.8.1/24

set interfaces xe-0/0/9 description "to Client"

set interfaces xe-0/0/9 encapsulation ethernet-bridge

set interfaces xe-0/0/9 unit 0 family ethernet-switching vlan members vlan10

set interfaces em0 unit 0 family inet dhcp

set interfaces em1 unit 0 family inet address 169.254.0.2/24

set interfaces lo0 unit 0 family inet address 172.16.40.1/32

set forwarding-options storm-control-profiles default all

set routing-options router-id 172.16.40.1

set routing-options autonomous-system 65400

set routing-options forwarding-table export loadbalance

set protocols bgp group underlay type external

set protocols bgp group underlay description "to Spine 1/2"

set protocols bgp group underlay export directs

set protocols bgp group underlay multipath multiple-as

set protocols bgp group underlay neighbor 172.16.7.100 peer-as 65500

set protocols bgp group underlay neighbor 172.16.8.100 peer-as 65600

set protocols bgp group overlay type internal

set protocols bgp group overlay local-address 172.16.40.1

set protocols bgp group overlay family evpn signaling

set protocols bgp group overlay local-as 65700

set protocols bgp group overlay multipath

set protocols bgp group overlay neighbor 172.16.10.1

set protocols bgp group overlay neighbor 172.16.20.1

set protocols bgp group overlay neighbor 172.16.30.1

set protocols evpn vni-options vni 10 vrf-target export target:1:10

set protocols evpn encapsulation vxlan

set protocols evpn multicast-mode ingress-replication

set protocols evpn extended-vni-list 10

set protocols igmp-snooping vlan default

set policy-options policy-statement LEAF-IN term import_leaf_esi from community comm-leaf_esi

set policy-options policy-statement LEAF-IN term import_leaf_esi then accept

set policy-options policy-statement LEAF-IN term import_vni10 from community com10

set policy-options policy-statement LEAF-IN term import_vni10 then accept

set policy-options policy-statement directs term 1 from protocol direct

set policy-options policy-statement directs term 1 then accept

set policy-options policy-statement loadbalance then load-balance per-packet

set policy-options community com10 members target:1:10

set policy-options community comm-leaf_esi members target:9999:9999

set switch-options vtep-source-interface lo0.0

set switch-options route-distinguisher 172.16.40.1:1

set switch-options vrf-import LEAF-IN

set switch-options vrf-target target:9999:9999

set vlans default vlan-id 1

set vlans vlan10 vlan-id 10

set vlans vlan10 vxlan vni 10

set vlans vlan10 vxlan ingress-node-replication
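Once the config is committed on all devices, a quick sanity check helps before you start troubleshooting the servers. The following is only a sketch of operational-mode commands I would run on a leaf such as Leaf-4; the output depends on your lab, so none is shown here.

```
show bgp summary
show route table bgp.evpn.0
show evpn database
show ethernet-switching vxlan-tunnel-end-point remote
show ethernet-switching table
```

Roughly in that order: the underlay and overlay sessions should be established first, then the EVPN routes should show up in bgp.evpn.0, and only after that should remote MAC addresses appear behind a vtep interface in the switching table.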

How much RAM do I need to run the vMX? I have installed a vMX but it doesn't start.

Hi togi,

for the vMX you need 2GB of RAM for the VCP and 4GB of RAM for the VFP (so 6GB per vMX).

Hi Chris

How did you run Junos Space in EVE-NG? Thank you.

Tony

Hi Tony,

the template for JunOS Space is already defined in EVE-NG. Just download the qcow2 (KVM) image from Juniper, upload it to your EVE-NG and start using it. I prefer Version 18.4 or newer, since it already includes the Juniper App-Store on Space to download ND and SD.

Hi Chris

Thank you for your reply. I meant how to access JunOS Space. I’ve already booted it up. Do we use VNC or SSH to access it? Thank you.

Tony

The “Console” is VNC, after initial config you can access it via ssh.

Thx a lot Chris!

What version of the vQFX was used in your topology? It appears a routing-instance with instance-type virtual-switch might be required to configure protocols evpn in version 17.4R1.16. Thanks!

Hi Alan,

17.4 – the “virtual-switch” is mainly used on the vMX.

BR

Chris

Hi Chris,

I’m trying to do multihoming on the vQFX as you did above; my other leaf is a Cisco NX-OS 9000v. The 9Kv accepts the type-1 and type-4 updates from the vQFX, but the type-2 routes are ignored. When I debug BGP updates on the 9Kv, I see that it ignores the type-2 routes because they have an Ethernet tag ID set to a non-zero value. Is there a way to change this so that the vQFX sets the Ethernet tag ID to 0 on outgoing type-2 updates?

Thanks!

Hi Serdar,

I haven’t tried that yet but will look into it.

Where do you terminate L3 for your hosts?

I implemented a Centrally Routed Bridging Overlay here. The inter-vni routing would be taking place at the spine-level.

Can you elaborate on this config a bit? I am having a hard time figuring out where your default gateway for your servers live. I can’t find an irb or anycast gateway for vlan 10 in your config.

Hi Dan,

that part of the config is not shown here.

What you see above is the Bridged Overlay (I attached a vSRX to the full topology, which acts as the gateway), but you could easily configure some IRBs on the spine for the CRB design or on the leaf for the ERB design.

That depends on what you want to configure
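As a rough illustration of the CRB variant mentioned above, a gateway for vlan10 on a spine could look like the sketch below. This is not part of the lab config shown in this post: the subnet 10.10.10.0/24 and both addresses are made-up placeholders, and the spine would additionally need its own VTEP and EVPN settings (switch-options, protocols evpn), just like the leaf devices.

```
# Hypothetical example, addresses are placeholders and not taken from this lab
set interfaces irb unit 10 family inet address 10.10.10.2/24 virtual-gateway-address 10.10.10.1
set vlans vlan10 vlan-id 10
set vlans vlan10 vxlan vni 10
set vlans vlan10 l3-interface irb.10
```

The servers would then use the virtual gateway address (10.10.10.1 in this sketch) as their default gateway, while each spine keeps its own unique irb address.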

Dear Christian

I have configured my topology the same as yours

but it’s not working

My apologies, it’s working

I made some mistakes when entering the AS number

Glad it works now 🙂

Dear Christian,

Great work. Planning to build same setup.

Can you please tell how much ram does one vqfx need? If I have to build same setup like yours, how much RAM and CPU is enough?

Thanks a lot,

it’s a lot of help

RE 2vCPU and 2GB RAM

PFE 2vCPU and 4GB RAM

Thanks .. means i gotta get a high-end server.

what would be the server specification for this topology Chris?

i have one at GPC with 8 vCPU and 30 GB RAM but having issue bringing the vQFX up.

It’s roughly 6GB of RAM for each vQFX and you should have at least 2 vCPU per vQFX to make it really shine. 8 vCPU for this topology is a little slim. Bare minimum would be 12 vCPU (1 per device if you don’t spin up the client VPCs).

Many thanks for this very informative article on vxlan and evpn. I am able to run your example on Eve NG on my home lab Intel NUC with 32Gig memory without any hassle. Even with slightly trimmed down memory for both the routing engine and PFE (vqfx) on 18.4

root@Leaf-1> show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static, SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC)

Ethernet switching table : 4 entries, 4 learned

Routing instance : default-switch

Vlan name    MAC address          MAC flags    Logical interface    Active source

vlan10       00:50:79:66:68:0d    D            xe-0/0/10.0

vlan10       00:50:79:66:68:0f    D            vtep.32770           172.16.30.1

vlan20       00:50:79:66:68:0e    D            vtep.32771           172.16.20.1

vlan20       00:50:79:66:68:10    D            vtep.32769           172.16.40.1

What’s up, just wanted to say, I liked this blog post.

It was inspiring. Keep on posting!

Thanks – glad you liked it 🙂

Amazing post, clear and simple

I am struggling to have EVE-NG working with vQFXs

Links randomly stop forwarding traffic and drop queues are full

For a similar topology with EVPN-VXLAN, the MAC advertisement is random

Any idea how I can make it more stable / reliable?

Hi Bro,

Please clarify this for me

Which one is correct for the vQFX:

vQFX RE: 2 vCPU, 2G RAM

vQFX PFE: 2 vCPU, 4G RAM

OR

vQFX RE: 2 vCPU, 4G RAM

vQFX PFE: 2 vCPU, 2G RAM

Please see this video https://www.youtube.com/watch?v=8HUCsXLBvjU

BR

Sayed

Hello,

Great work! How did you test with a Winserver (Multihome)? What image do I have to load to EVE-NG to have that?

Any Windows Server image will do – just make sure to have 2 interfaces assigned to the Windows image 🙂