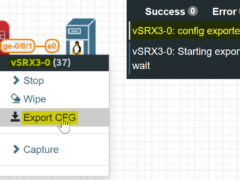

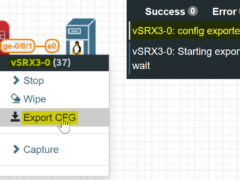

Create a config export script for the vSRX 3.0 template

Some time ago I blogged about a custom template for the vSRX 3.0. One drawback was that the config export no longer worked, since this is a custom template, but…

Have you ever noticed that your vSRX, vMX and vQFX run at an insane CPU percentage? Well yes, you might think it is because on DPDK hosts (I wrote about that earlier), the…

Just FYI – 15.1X49-D160 for the vSRX came out today. Tested with EVE-NG PRO (2.0.4-98) – no issues so far. Happy updating 😉

Yesterday, as part of my JNCIE-SEC training, I reviewed NAT64 with the following topology: I pinged from Win to Winserver with traffic going over Gemini (vSRX 15.1X49-D120), Pisces (vMX 17.3R1-S1.6),…

The new vSRX 15.1X49-D120 is out and of course I already spun it up with EVE 😉 What can I say: it runs just fine, just like D100 and…

On my way to the JNCIE, NAT64 is also a topic. Below you will find a working example of how I achieved this; comments are welcome 🙂 Site 1…

Today I experimented with NAT64 / NAT46 a bit. The setup to test this is relatively easy: I took two Windows Servers (2008R2), one with only IPv4 and one with only…

Just tested the new vSRX D100 version on EVE and ESX. Compared to D90, it feels way slower on both, but seems to run very well once…

I promised to deliver this and here it is: OSPF over vQFX 😉 These days I lab a lot with EVE and I love it more every day – the…

The KVM version of the latest vQFX routing engine VM (vqfx10k-re-15_X53-D63) seems to be broken. If you try to run it, it will crash with a kernel panic and will…